The minimum viable product of any DevOps solution is a reliable, automated deployment of an application that requires no developer interaction (other than optionally clicking a start button). Regardless of which pipeline platform you have available, your first decision will be whether to follow a “push” or a “pull” methodology for your pipeline.

With IdentityIQ (IIQ) and a pipeline platform like the GitLab DevOps Platform, IIQ will likely be hosted on-prem, and a GitLab Runner can be installed on the IIQ server to execute SSB build and deploy tasks as if it were a human developer. This is an example of the pull methodology: the host server pulls the project from source control and then builds and deploys it on that same server. The pull methodology is an easy way to set up a deployment pipeline with minimal resources. However, it becomes infeasible once you expand the pipeline to include pre- and post-deployment testing, because the runner/agent siphons resources from the running IIQ server every time a git commit triggers these continuous tests. The pull methodology also has a weakness concerning high availability: a failed deployment may bring down not only the IIQ server but also the runner/agent, which then requires intervention from a developer. And because IdentityNow (IDN) is a SaaS application, the pull approach cannot be used with it at all. None of this makes the pull methodology inherently bad. It is usually a team’s first pass at building a CI/CD pipeline, used to determine whether investing more time in a DevOps solution is worthwhile.

The push methodology eliminates many of these issues and, most importantly, can be used in both IIQ and IDN projects. In this approach, a build server on-prem or in the cloud is utilized separately from the IIQ server (or IDN tenant) and runs the pipeline platform runner/agent. The runner/agent then handles all testing, building, and deploying relevant artifacts to the IIQ or IDN application. While more complicated, this approach prevents resource drain on the IIQ servers, offers high availability and resilience by being separately hosted and capable of redeploying artifacts during a disaster recovery event, and is the only approach supported by IDN. Many additional benefits to this approach will be explained later in the series.
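As a rough illustration of the runner/agent's job in the push approach, the sketch below runs test, build, and deploy stages in order on the build server and stops at the first failure, so a failing test never reaches the IIQ server or IDN tenant. The stage names and the `run_pipeline` helper are hypothetical and are not part of any pipeline platform's API.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, step in stages:
        if not step():
            break  # later stages (for example deploy) are never started
        completed.append(name)
    return completed
```

In a real pipeline each callable would wrap an actual test, build, or deploy command; most pipeline platforms provide this ordering natively, so the sketch only makes the control flow explicit.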

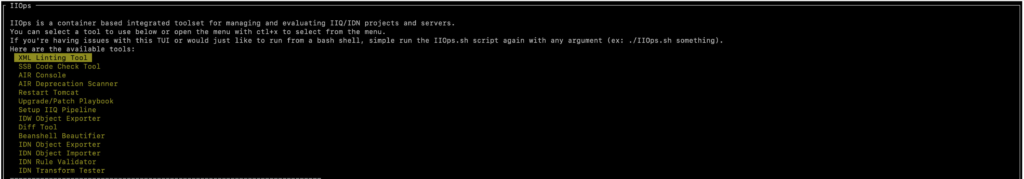

The next question to arise after deciding on the push methodology is, “What does this build server look like?” For some teams, it’s a CentOS IaaS server running a Jenkins agent; for some, it’s a shared GitLab runner running in an on-prem CaaS environment; for others, it’s Azure DevOps all in the cloud. We see many different stacks across our clients, and while yours may not change as often, there is always the possibility of a shift. For this reason, we rely on containerization technologies like Docker and Podman to eliminate compatibility issues no matter the environment. Service containers have become increasingly popular and provide a portable platform for build dependencies, deployment frameworks, and testing tools. In our solution’s container image, for example, every tool can be run locally by a developer without any prior setup beyond installing Docker or Podman, and this same image is used in a pipeline to handle both IIQ and IDN deployment and testing tasks. Because containers are lightweight, almost anything can serve as a build server. This opens the door to pseudo-edge computing pipelines in which developer workstations are pooled as runners/agents instead of relying on a dedicated build server.
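To make the "same image locally and in the pipeline" idea concrete, here is a minimal sketch that picks whichever container engine is installed and builds the command for running one task inside a throwaway container. The image name, the `/workspace` mount point, and both helper functions are assumptions made for illustration; only the `run`, `--rm`, `-v`, and `-w` options are standard in both the Docker and Podman CLIs.

```python
import shutil
from pathlib import Path

# Hypothetical image name; substitute your own service container image.
IMAGE = "registry.example.com/iam-pipeline:latest"


def find_engine(candidates=("podman", "docker"), which=shutil.which):
    """Return the first container CLI found on PATH, or None if neither exists."""
    for name in candidates:
        if which(name):
            return name
    return None


def build_run_command(engine, project_dir, task_args, image=IMAGE):
    """Build an argv list that runs one task in a throwaway container."""
    project = Path(project_dir).resolve()
    return [
        engine, "run",
        "--rm",                         # remove the container when the task exits
        "-v", f"{project}:/workspace",  # mount the project into the container
        "-w", "/workspace",             # run the task from the project root
        image,
        *task_args,
    ]
```

A developer workstation and a pipeline job could both pass the resulting list to `subprocess.run(..., check=True)`, which is what keeps the two environments aligned: the only host requirement is the container engine itself.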

The initial conversation around containers is often the biggest challenge of building a pipeline, as many organizations do not already use containerization technology. One objection is the cost of Docker: in the last couple of years, Docker began requiring paid subscriptions from larger organizations for its Docker Desktop product. Fortunately, Red Hat released the free Podman containerization platform, which offers nearly 1:1 feature parity with Docker. Podman is also available in the default repositories of many Red Hat-family distributions and can be installed with a single-line command. With regard to security, containers reduce exposure because they exist only for the duration of a pipeline job. A container is created from the container image at the start of a job, can be injected with sensitive information from a password vault or secret store, and is destroyed at the end of the job, which avoids a persistent build server with secrets stored on its file system. Finally, there are concerns that containerization is a new and niche technology with limited adoption in the workforce. According to the 2023 Stack Overflow survey, Docker is the most used developer tool and the most desired developer tool among respondents. There are more SMEs in containerization technologies than ever to help with learning curves, and new features are added constantly. Knowledge from containerized application developers transfers quickly to the service container space, so developers can benefit from containers even if they lack that background or the application they support isn’t containerized.
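The ephemeral-secrets pattern described above can be sketched as follows, assuming the pipeline platform has already placed the secrets from the vault into the job's environment. The secret names and helper functions are hypothetical. The sketch relies on the documented behavior of `-e NAME` in both Docker and Podman: when no value is given, the value is read from the host environment, so it never appears in the command line, and `--rm` discards the container when the job ends.

```python
import os

# Hypothetical secret names; use whatever your vault integration exports.
SECRET_NAMES = ("IIQ_DEPLOY_PASSWORD", "IDN_CLIENT_SECRET")


def secret_env_flags(names=SECRET_NAMES, environ=None):
    """Return '-e NAME' flags, failing fast if any secret is missing."""
    environ = os.environ if environ is None else environ
    missing = [name for name in names if not environ.get(name)]
    if missing:
        raise RuntimeError("missing secrets: " + ", ".join(missing))
    flags = []
    for name in names:
        # Name only: the engine reads the value from the job's environment.
        flags += ["-e", name]
    return flags


def ephemeral_run_command(engine, image, task_args, names=SECRET_NAMES, environ=None):
    """Build an argv list for a container that is removed when the job ends."""
    return [engine, "run", "--rm", *secret_env_flags(names, environ), image, *task_args]
```

When the resulting list is passed to `subprocess.run`, the child process inherits the job's environment by default, which is how the engine sees the secret values without them being written to disk or echoed in logs.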

To summarize, across all of our clients, we’ve found the best way to build a powerful and robust IIQ and IDN pipeline is to use a push methodology leveraging a service container to test, build, and deploy to any environment. This approach allows you to leverage whatever technology you have available as a build server, utilize whatever pipeline platform you currently have, and meet high availability, scalability, and security requirements.

In the following posts of this series, we’ll focus on phase 1 of our DevOps solution, Linting and Code Check. These posts will be specific to IIQ and IDN but will use the same service container image. During live implementations at client sites, we follow these phases in numerical order, with each phase providing certain access milestones on the way to the final pipeline.