Continuing the discussion from In Sync & Secure: CI/CD Design Challenges and Considerations:

Phase 1 of the DevOps solution standup process focuses on the pre-deployment tests you can run to keep issues from reaching your IdentityIQ environment, using both SailPoint and custom tooling to meet your testing needs. Phase 1 also serves as the initial check that your build infrastructure is provisioned with enough resources, that a container registry has been selected for your service container image, that firewall exceptions are in place between your source control platform and build infrastructure, and that you have accessible artifact storage for the test reports and artifacts created later in phases 2 and 3.

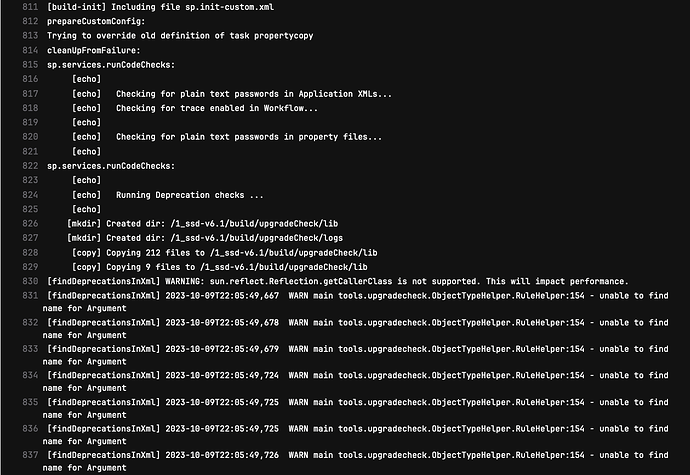

To start, we create a service container with the dependencies needed to run a regular SSB build. You can test with an existing IIQ project or use a clean SSB/SSD project directly from SailPoint. Once you’ve verified that a build can complete from start to finish in the container, you can leverage the out-of-the-box SSB/SSD ANT jobs to perform basic linting, deprecation checks, log checks, etc., and translate these to your pipeline YAML. From here, we upload the service container image to the selected container registry, reference the image in the pipeline YAML, and kick off our first pipeline run. At this point, you’ll have the standard SSB dialogue displaying in your pipeline logs, giving you plain-text results of the linting and code check tests.

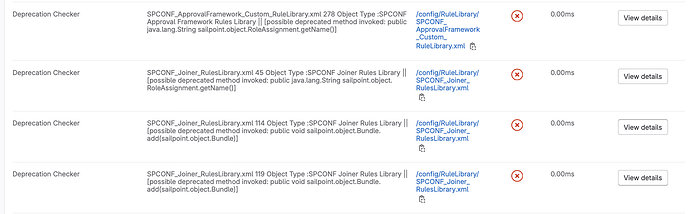

These tests are still “dumb”: they won’t trigger an outright failure in the pipeline, and they may take longer than needed because the build performs every step required to create the war file. To optimize them, we modify the ANT jobs and store the modified copies in the container rather than in the existing IIQ repo, so that manual builds and deployments can still occur if needed. To parse for failures and produce reports that pipelines can consume, we rely on custom tooling developed in Python, chosen for its ease of use and strong file-parsing capabilities. Using Python tooling, we can overlay our modified and custom ANT jobs, trigger them while monitoring output in real time, and generate fully custom JUnit test reports, a format supported by almost all pipeline platforms.

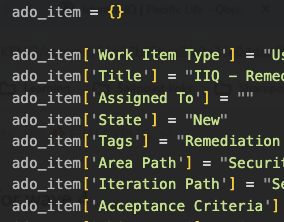
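As a rough illustration of the "trigger, monitor, and report" idea described above, here is a minimal sketch using only the Python standard library. It is not the tooling from this series: the function names `run_and_capture` and `write_junit_report`, the default failure marker, and the single-testcase report layout are all assumptions made for the example. The command to run is passed in by the caller, so no particular ANT target name is assumed.

```python
import subprocess
import xml.etree.ElementTree as ET


def run_and_capture(cmd, fail_markers=("BUILD FAILED",)):
    """Run cmd, echo its output line by line, and collect lines that look like failures.

    fail_markers is an assumption for this sketch; tune it to your own build output.
    """
    proc = subprocess.Popen(
        cmd,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr so log ordering is preserved
        text=True,
        bufsize=1,  # line-buffered, so lines are seen as they are produced
    )
    failures = []
    for line in proc.stdout:
        line = line.rstrip("\n")
        print(line)  # keep the live log visible in the pipeline
        if any(marker in line for marker in fail_markers):
            failures.append(line)
    return proc.wait(), failures


def write_junit_report(suite_name, case_name, exit_code, failures, path):
    """Write a one-testcase JUnit-style XML file and return True if the case failed."""
    failed = exit_code != 0 or bool(failures)
    suite = ET.Element(
        "testsuite", name=suite_name, tests="1", failures=str(int(failed))
    )
    case = ET.SubElement(suite, "testcase", classname=suite_name, name=case_name)
    if failed:
        failure = ET.SubElement(case, "failure", message=f"exit code {exit_code}")
        failure.text = "\n".join(failures)
    ET.ElementTree(suite).write(path, encoding="utf-8", xml_declaration=True)
    return failed
```

A wrapper script could call `run_and_capture` with whatever ANT command your container runs, pass the result to `write_junit_report`, and exit non-zero when it returns `True`, so that the pipeline step fails instead of only logging the problem.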

Now that we have out-of-the-box tests running with proper error handling and meaningful pipeline reports, we can extend features beyond what SSB/SSD offers. At this point, we have already loaded many of the dependencies needed to run Python tools in our container and have seen how easily file parsing can be done in Python. Python is also great at interfacing with web resources, with an extremely easy-to-use API request library and many application-specific libraries for web applications like Azure DevOps. We combine these strengths to run more advanced pre-deployment tests. For example, we compare target.properties files to identify tokens missing between environments, and we check token values for things like web addresses and perform heartbeat checks against those addresses, catching issues before they surface via test connect in the IIQ UI. Taking this further, we can add loop-back functionality to our Python tools via API request libraries. Using this and a badge generation library, we can push artifacts back to the repository and provision badges for high visibility.
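The target.properties comparison and heartbeat check could be sketched roughly as follows, using only the standard library. This is an illustration under assumptions, not the tooling from this series: the function names are hypothetical, the parser handles only simple `key=value` lines (no Java properties escapes or line continuations), and the heartbeat is a plain TCP connect rather than a protocol-level check.

```python
import socket
from urllib.parse import urlparse


def load_properties(path):
    """Parse a simple key=value properties file, skipping blanks and comments."""
    props = {}
    with open(path, encoding="utf-8") as handle:
        for raw in handle:
            line = raw.strip()
            if not line or line.startswith(("#", "!")) or "=" not in line:
                continue
            key, value = line.split("=", 1)
            props[key.strip()] = value.strip()
    return props


def missing_tokens(reference, candidate):
    """Return the tokens defined in reference but absent from candidate, sorted."""
    return sorted(set(reference) - set(candidate))


def url_tokens(props):
    """Return {token: value} for values that parse as a URL with a scheme and host."""
    found = {}
    for key, value in props.items():
        parsed = urlparse(value)
        if parsed.scheme and parsed.hostname:
            found[key] = value
    return found


def heartbeat(url, timeout=3.0):
    """Return True if a TCP connection to the URL's host and port succeeds."""
    parsed = urlparse(url)
    # Default ports are assumptions for common schemes; extend as needed.
    default_ports = {"http": 80, "https": 443, "ldap": 389, "ldaps": 636}
    try:
        port = parsed.port or default_ports.get(parsed.scheme)
    except ValueError:  # raised by urlparse for a malformed port
        return False
    if not parsed.hostname or port is None:
        return False
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False
```

In a pipeline step, you might load two environments' files, fail the step if `missing_tokens` returns anything, and then run `heartbeat` on each value returned by `url_tokens`. Whether an unreachable address should fail the run or only raise a warning depends on where your build infrastructure sits relative to the target systems.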

We can then go even further, leveraging this loop-back functionality with the previously implemented log parsing functionality to open bugfix tickets on an IAM team’s project management board or even make the correction and push the fix to the repo directly without developer interaction!

At this point, questions may arise about the security of having a service account piloted by a Python tool. As discussed in the previous blog post, because the service container is ephemeral and the service account credentials are stored in a password vault and used only during pipeline runtime, we can ensure safe connectivity to these resources and, in turn, prepare for password handling during phase 2: Build and Deploy.

To summarize, this is the approach we took to build our pre-deployment testing functionality, and it covers only a small portion of what our container can do now. The key takeaway is that by including Python and the bare essentials needed for an IIQ build in our service container, we created an easily expandable pre-deployment testing framework. The best starting point, though, is to create a container that can run the official SailPoint tool first, then expand functionality from there. The dependencies used in these pre-deployment testing tools will be reused in later phases, like phase 2, where we will leverage the popular infrastructure-as-code tool Ansible (written in Python) to interface with our IIQ servers during deployment. Next in the series, I’ll cover how we perform tests similar to those described here against an IdentityNow environment, where we will lean more heavily on custom tooling than official SailPoint tools, followed by phase 2: Build and Deploy for both applications. If you’d like more information or a demo of the topics discussed in this post, please feel free to contact me via chat or ask below!